It's mainly applicable to KDE, but it is also valid for other DEs.

To keep things short, here's the bug report with the affected numbers (before and after the fix is applied). It's a ready-made solution.

bug kde dot org:

bug.cgi?id=484317

Explanation:

Someone at KDE proposed compressing all the large PNG wallpapers to lossless JXL. According to my testing, lossless JXL is very slow to both compress and decompress. And in lossy mode (lossy just like JPEG is lossy), it loses big time to AVIF (AV1, aom).

The savings would be only 10 MB, at the cost of a lot of speed.
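To check speed claims like these on your own machine, a small timing helper is enough. This is a minimal sketch, not the method used in the bug report; the name `time_ms` is hypothetical, and it assumes bash plus GNU `date` (for `%N` nanoseconds).

```bash
# Hypothetical helper: runs a command and prints its wall-clock time in ms.
# Assumes GNU date, which supports %N (nanoseconds since the last second).
time_ms() {
    local start end
    start=$(date +%s%N)
    "$@" >/dev/null 2>&1 || return 1   # discard output; fail if the command fails
    end=$(date +%s%N)
    echo $(( (end - start) / 1000000 ))
}
```

For example, `time_ms djxl wallpaper.jxl /tmp/out.ppm` versus `time_ms dwebp wallpaper.webp -ppm -o /tmp/out.ppm` (file names are placeholders). Decoding to PPM rather than PNG keeps PNG encoding time out of the measurement; run each command several times, since the first run may also include reading the file from disk.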

The current situation is also bad. The PNGs are far too big, and they:

- have to be read from disk (big files)

- are slow to decompress (compression level “9”, plus PNG compression itself)

- have to be read 3 times (if you use the same image for all of them, as I hope you do):

a) login screen (SDDM)

b) lock screen

c) wallpaper

The numbers in the bug report speak for themselves. The optimizations used are listed there too, so I won't repeat them here.

P.S. As for lossless cwebp (VP8L, -z 9, -mt), it beats lossless cjxl by a mile: it's much faster than cjxl to both compress and decompress. So please don't follow the KDE proposal to slow down the computer just to save 10 MB. They should read their own bug reports first! It's all there!
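A batch conversion along these lines might look like the following. It is a minimal sketch, assuming `cwebp` from libwebp is installed; the function name `convert_pngs_to_webp` is hypothetical. `-z 9` selects cwebp's slowest (smallest-output) lossless preset and `-mt` enables multithreading.

```bash
# Hypothetical helper: converts every *.png in a directory to lossless WebP
# next to the original. Assumes cwebp (libwebp) is on PATH; PNGs are kept.
convert_pngs_to_webp() {
    local dir="${1:-.}" png count=0
    for png in "$dir"/*.png; do
        [[ -e "$png" ]] || continue   # no match: the glob stays literal, skip it
        command -v cwebp >/dev/null || { echo "cwebp not found" >&2; return 1; }
        # -z 9: slowest lossless preset (smallest output); -mt: multithreading
        cwebp -quiet -lossless -z 9 -mt "$png" -o "${png%.png}.webp" || return 1
        count=$((count + 1))
    done
    echo "$count"   # number of files converted
}
```

Usage: `convert_pngs_to_webp /path/to/wallpaper/contents/images` (placeholder path). The PNGs are left in place so the sizes can be compared before deleting anything.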

Here are the optimized KDE 6 wallpapers (crunch the numbers yourself: size and read speed).

Light version (all resolutions):

https://upload.adminforge.de/r/wpLa_YHMSj#D1nbgIuvilOB0ozAAGuYruFgnXUt5lJH6I0zgLFk2tg=

Dark version (all resolutions):

https://upload.adminforge.de/r/B8NgWUcDSy#+QgPMKZP0ewhYCTIdg8MceVUuqPEh+VlbmyceyOUzo4=

Enjoy faster decoding and read speed!

P.S. Such a small thing, but why has nobody ever thought of it…


Hi,

thanks for your suggestion.

Admittedly this would be a really small improvement, but I think KDE could consider building it directly into their wallpaper feature, e.g. converting an image to xyz when it's added.

I will check out the bug report.

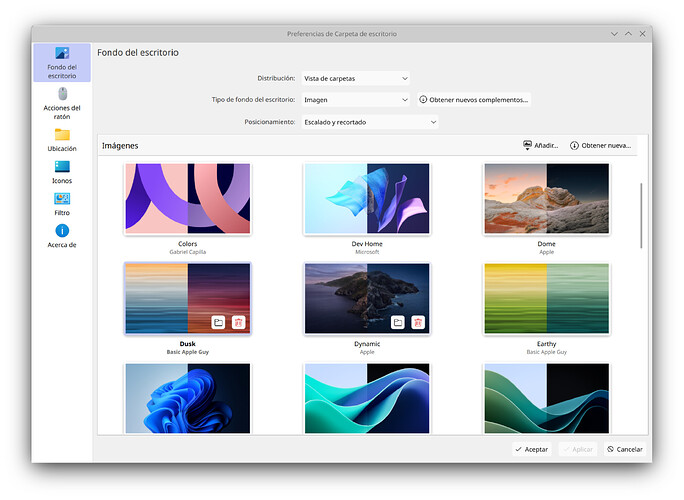

Interesting… (maybe related) Some time ago I wrote a small script to generate light/dark wallpaper images automatically.

I wrote a function that detects the monitor resolution, so the base image is downscaled to match it. This way there is no need to ship 19 images in 19 resolutions.

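```bash
# Prints the largest WIDTHxHEIGHT string in xrandr's output (X11/XWayland).
# On a single monitor this is usually its native resolution.
function get_monitor_resolution() {
    local resolutions
    # grep extracts every "1920x1080"-style token; version sort puts the largest first
    resolutions=$(xrandr | grep -oP '\d+x\d+' | sort -rV | head -n1)
    echo "$resolutions"
}
```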
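Once the resolution is known, the base image has to be scaled so that it covers the screen and then cropped. The helper below is a hypothetical sketch (not the author's code) that computes that intermediate size with integer math, assuming the `WIDTHxHEIGHT` string that `get_monitor_resolution` prints.

```bash
# Hypothetical helper: given the source image size and a target "WxH" string,
# print the size to scale to so the image fully covers the target while
# keeping its aspect ratio (the overflow is cropped afterwards).
cover_size() {
    local src_w=$1 src_h=$2 target=$3
    local dst_w=${target%x*} dst_h=${target#*x}
    # Compare aspect ratios without floating point: src_w/src_h vs dst_w/dst_h
    if (( src_w * dst_h >= dst_w * src_h )); then
        # Source is wider (or equal): match the height, round the width up
        echo "$(( (src_w * dst_h + src_h - 1) / src_h ))x${dst_h}"
    else
        # Source is taller: match the width, round the height up
        echo "${dst_w}x$(( (src_h * dst_w + src_w - 1) / src_w ))"
    fi
}
```

For example, `cover_size 3840 2160 1280x1024` prints `1821x1024`. If ImageMagick is available, `magick base.png -resize 1920x1080^ -gravity center -extent 1920x1080 out.png` (or `convert` on ImageMagick 6) does the same scale-and-crop in one step.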

The script also generates the metadata file, in which you can specify the image's author.

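```bash
# Writes a metadata.json into each wallpaper's destination directory.
# Relies on the associative array "wallpapers" and on the helpers
# prepare_destination_directory and generate_metadata, defined elsewhere in the script.
function create_metadata_file() {
    local authors_name=""
    if [[ "$1" == "--authors" && -n "$2" ]]; then
        authors_name="$2"
    fi

    local name
    for name in "${!wallpapers[@]}"; do
        local file="${wallpapers[$name]}"
        if [ -d "$file" ]; then
            local destination
            destination=$(prepare_destination_directory "$file")
            local wallpaper_name
            wallpaper_name=$(basename "$file")
            local metadata
            metadata=$(generate_metadata "$authors_name" "$wallpaper_name")
            echo "$metadata" > "$destination/metadata.json"
            echo "Creado metadata.json en $destination"   # "Created metadata.json in ..."
        else
            echo "Advertencia: $name no encontrado."        # "Warning: ... not found."
        fi
    done
}
```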
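`generate_metadata` itself isn't shown in the post. Plasma wallpaper packages carry a `metadata.json` with a `KPlugin` object, so a hypothetical reconstruction could emit a minimal one like this. The `Authors`, `Id`, and `Name` fields follow the layout of the wallpapers shipped with Plasma, but treat the exact field set, and the "Unknown" fallback author, as assumptions.

```bash
# Hypothetical reconstruction of generate_metadata (not the author's version).
# Prints a minimal Plasma wallpaper metadata.json. Assumes neither argument
# contains double quotes or backslashes (no JSON escaping is done here).
generate_metadata() {
    local author="${1:-Unknown}" name="$2"   # "Unknown" fallback is an assumption
    cat <<EOF
{
    "KPlugin": {
        "Authors": [
            {
                "Name": "$author"
            }
        ],
        "Id": "$name",
        "Name": "$name"
    }
}
EOF
}
```

Because `create_metadata_file` passes an empty author when `--authors` is omitted, the `${1:-Unknown}` default keeps the field from being blank.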

If anyone is interested, give the script a try. It would be nice to incorporate what the original poster suggests to optimize the images even further. Download example here

Usage: `./main --generate --authors "CachyOS"`

I have a lot of stuff like that, but I don't usually post it. You have to edit the script to specify the name of the folder containing the images, and the images must be named “light.png” for the light image and “dark.png” for the dark image. Then the magic happens.

Some messages are in Spanish because, well, I never thought I'd share the script.
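The post doesn't show `prepare_destination_directory` either. Assuming the goal is a standard Plasma wallpaper package, a hypothetical version could create `contents/images` for the light variant and `contents/images_dark` for the dark one (the folder names Plasma uses for light/dark wallpapers), then print the package root where `metadata.json` is written. The default output root `./out` is an assumption.

```bash
# Hypothetical sketch of prepare_destination_directory (not the author's code).
# Creates a Plasma-style package for SRC_DIR under OUT_ROOT and prints its root.
# Assumed layout: contents/images (light variant), contents/images_dark (dark).
prepare_destination_directory() {
    local src="$1" out_root="${2:-./out}"
    local pkg
    pkg="$out_root/$(basename "$src")"
    mkdir -p "$pkg/contents/images" "$pkg/contents/images_dark" || return 1
    echo "$pkg"
}
```

The downscaled `light.png` and `dark.png` would then go into those two folders under resolution-based names such as `1920x1080.png`.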


This bug report mostly talks about file size, not performance. There are claims about decoding speed, but no evidence or benchmarks. And I'm not aware of anyone claiming that newer codecs are less computationally taxing than older ones (as in PNG vs. AVIF or JXL).

Of course it's fine to optimize disk space, but the value we really care about should be the footprint of the decoded image in memory. Is there any indication of a (significant) difference either way?
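As a rough guide: once decoded, an image occupies about width × height × bytes-per-pixel, whichever format it was stored in. A quick estimate, assuming 8-bit RGBA (4 bytes per pixel) and ignoring any extra copies the compositor or GPU may keep; the function name is hypothetical and the optional third argument covers decoding at a higher bit depth.

```bash
# Hypothetical helper: estimated in-memory size of one decoded image, in bytes.
# Default of 4 bytes per pixel assumes 8-bit RGBA; the file format plays no part.
decoded_bytes() {
    local w=$1 h=$2 bpp=${3:-4}
    echo $(( w * h * bpp ))
}
```

`decoded_bytes 3840 2160` prints 33177600 (about 31.6 MiB), and `decoded_bytes 5120 2880` prints 58982400 (56.25 MiB).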

Avoiding an additional 4 MB read at boot could be significant on a system with an old, fragmented SATA-1 HDD but a 5K display, but I have some doubts.
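A back-of-the-envelope check for that scenario: reading N MB at a sustained T MB/s takes about N / T seconds, plus seek time on a fragmented disk. The function name is hypothetical, and the throughput, fragment count, and seek time in the examples are assumed figures, not measurements.

```bash
# Hypothetical helper: rough read-time estimate in milliseconds (integer math).
# Args: size in MB, sustained throughput in MB/s, number of seeks, avg seek in ms.
read_time_ms() {
    local size_mb=$1 mb_per_s=$2 seeks=${3:-0} seek_ms=${4:-0}
    echo $(( size_mb * 1000 / mb_per_s + seeks * seek_ms ))
}
```

With an assumed 100 MB/s, `read_time_ms 4 100` prints 40; assuming the file is split into 20 fragments at about 10 ms per seek, `read_time_ms 4 100 20 10` prints 240.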